Becoming One with the Machine

Malt Liquidity 130

I have a bit of a joke that I’m a naturally occurring generative AI. For those of you who have read my around-the-clock, randomly disbursed X ramblings, sat with me over a drink, or had the misfortune of being in a group message with me, you certainly know what I’m talking about — that harebrained tendency to rapidly fill up a space with stream of consciousness and connect topics by seemingly throwing darts.

Remember a couple of things I wrote about in the last AI post:

My own philosophy is that the human brain is the most powerful pattern recognition machine to ever exist…

I specifically disagree with “anti-tech revolution” thinking because of both the naturalist fallacy and the probability that, even in a timeline reset, it simply plays out the same way. The answer, like at the end of Deus Ex, is not to listen to Tracer Tong and reset society, but to merge with Helios. It’s the same reason I look down on the idea of “monk mode” and using a “dumb phone.” The smartphone is the most powerful hardware ever invented in human history, and you’re not going to use this to accelerate yourself further? Of course, we all know the bad side of too much screen time. But a primitive version of humans merging with a greater technical intelligence is combining human intuition with technological tools to go even further. This is a net good thing!

Let’s go a bit further. If the brain is the supercomputer of pattern recognition, the eyes are supercomputers of data processing. Critically, these are two separate-but-related instances, as eyes are capable of independently processing and driving an action without necessarily consciously processing it. We can call the independent subconscious action “reaction”, but obviously when the brain and the eye merge, that subconscious activity is where we get “intuition” from. This is why processing information visually is always faster than, say, listening to a lecture — you will simply never get as much information from an audiobook in the same time that you could have spent reading it, both as a function of words per minute and at the rate that information seeps into your brain. Recall my chess example:

But how much processing power, code, and data is required to program a fork into Fritz or teach AlphaZero a pin? A moderately bright 5 year old could figure out the concept seeing it just twice and start to utilize it in their own games. They wouldn’t even need the full game data, just the positions.

Notably, chess is powered by visual pattern recognition, which is why blindfold chess becomes ludicrously demanding of your conscious processing and working memory capacity. You have to store the entire game data and maintain its state; you can’t just look at the board to visually refresh, which is a much more efficient and lighter process.

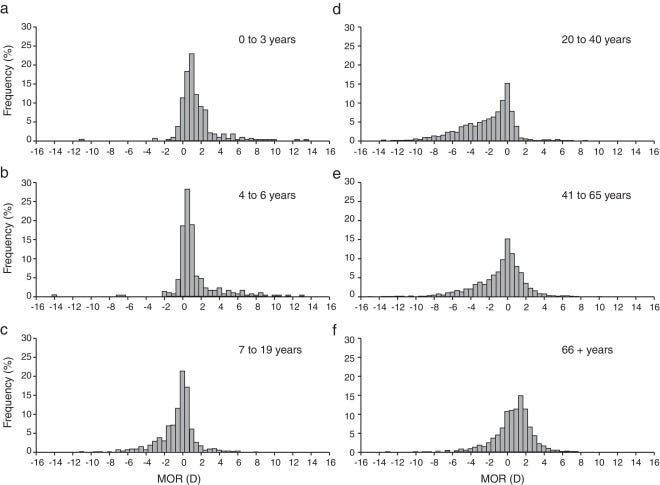

Turbocharging yourself doesn’t mean that you directly have to utilize the tech in a process. You can also borrow its processing to rewire yourself. Take, for example, how I modified my vision. I’ve had pretty bad vision my entire life — if you take this chart,

I’m on the far left tail from 7 to 19 (the only time I’ve been on that side of the curve). For a good chunk of my life, I wouldn’t have been able to tell the difference between early career Michael Jackson and late career Michael Jackson from the three-point line of a basketball court because of how badly my eyes were affected by sunlight, along with my vision. Needless to say, I relied on contacts and glasses to get anything done even though my reaction speed is extremely fast. From 13 onwards, I wasn’t able to play basketball again without glasses — until a little over a decade later, when all of a sudden, I could play full speed pickup without any vision aid at all. What happened?

First, let’s talk about Charles Oliveira, former UFC belt-holder. Take a gander at this:

Charles Oliveira is nearly blind when he takes off his glasses, but that hasn’t stopped the reigning UFC lightweight champion from becoming one of the very best fighters in the world today.

You may have noticed throughout each and every fight week involving Oliveira that “Do Bronx” walks around with his patented glasses. Those are not for show and certainly something that Oliveira needs for everyday life. But when he steps inside of the cage those glasses are removed and Oliveira is fighting with impaired vision…

“I see three [faces]. If I hit the middle one, that’s fine,” said Oliveira. “I think keep this same technique. Three guys for me is perfect. If I hit the one in the middle, it’s good. I’ll tell you the truth, I’m a guy who has a lot of faith in God and it’s no joke. If I take my glasses off, I only see 50 per cent but it never hindered me in a fight.”

As anyone who has done a high-speed, intensive activity knows, if you’re actually seeing it, it’s too late to react. Whether it’s a one-tap while playing Counter-Strike or taking a basketball shot, you haven’t consciously sized up that angle or decision — you just do it. Do Bronx operates in the same way — he’s acutely trained his own visual complex to react to the blurred blocks subconsciously to a champion-tier fighting level. Certainly, visual clarity helps with this. Hitting a baseball is one of the hardest things to do in all of sports, and it’s no coincidence that professional hitters’ eyesight is notoriously better than the general population’s:

The results of visual acuity testing were most surprising. Certainly, we felt that professional baseball players must have excellent visual acuity, but we were surprised to find that 81% of the players had acuities of 20/15 or better and about 2% had acuity of 20/9.2 (the best vision humanly possible is 20/8). The average visual acuity of professional baseball players is approximately 20/13…

Our final area of focus has been on contrast sensitivity. Due to the importance of this function to both visual acuity and visual function on the playing field (i.e. tracking a white ball against the stands or against a cloudy sky), we used three tests of contrast sensitivity. Our results indicate that baseball players have significantly better contrast sensitivity than the general population.

So how do we explain hitting a 98-mph fastball, which you obviously cannot consciously think your way through? Well, here’s the key — not all pitches are fastballs. The initial velocity and spin trajectory must be instantly approximated to even be able to whiff at the proper time. Thus we see the symbiotic relationship between the muscle memory of the brain and the processing of the eye — it’s unlikely that the conscious brain plays any part in a baseball swing (which is why, when it does come into play, it messes things up — choking and the yips stem from the pure eye process being disrupted).
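To put a rough number on how little time that leaves, here is a back-of-the-envelope sketch in Python. It assumes the regulation 60 feet 6 inches from the pitching rubber to home plate and ignores both drag and the pitcher's release extension, so treat the result as a ballpark figure. The 85 mph changeup is my own illustrative assumption, not a measured pitch.

```python
# Rough pitch flight time: distance / speed, ignoring drag and release extension.
MOUND_TO_PLATE_FT = 60.5   # regulation rubber-to-plate distance (60 ft 6 in)
FT_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def flight_time_seconds(speed_mph: float, distance_ft: float = MOUND_TO_PLATE_FT) -> float:
    """Return the approximate time for a pitch to travel distance_ft at constant speed."""
    feet_per_second = speed_mph * FT_PER_MILE / SECONDS_PER_HOUR
    return distance_ft / feet_per_second


# 98 mph comes from the text above; 85 mph is a hypothetical off-speed pitch.
for label, mph in [("fastball", 98), ("changeup", 85)]:
    print(f"{label}: {flight_time_seconds(mph) * 1000:.0f} ms")
# fastball: 421 ms
# changeup: 485 ms
```

Under these assumptions the whole flight takes well under half a second, and the gap between the two pitches is only about 64 ms, which is the difference the eye has to pick up from the release alone.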

So what does this have to do with AI? Well, at one point I got the opportunity to hop in a self-driving Waymo car. While you’re in it, you see a screen that tracks the “vision” of the vehicle — stop signs, approximate path, other moving objects.

Note the visual representation of larger objects — they’re blocks. The traffic cones are likely pyramids, and the humans likely cylinders, but for visual clarity, they’re given a pretty overlay. Think about this like Do Bronx — do we need to read the branding on the trousers or the make of the car to know how to avoid getting crushed by an oncoming force? When I naturally walk outside and squint, rather than allowing my brain to “correct” for the approximate shape my eye has inputted to my brain, do I really need to see every leaf on a tree to ride a bike without hitting it? Suddenly, I find myself able to do activities (or even just walk around in bright sunlight) that I never thought possible without glasses up until I had the idea to think a bit more like a Waymo car. By refactoring how I thought about blur in precise vision versus approximate vision, I can all of a sudden pick out beer bottles from across the bar whose labels I clearly can’t read, just because I recognize the shape of them. My biggest fear used to be my glasses breaking — now, I can write this blog post without any vision aid whatsoever. It’s similar to how that old Facebook boomer meme works — your brain corrects the approximation to the familiar, recognized pattern at a blindingly fast rate, well beyond the speed of consciously working out each word.

It’s shockingly intuitive — the reason we can mimic AI systems in our own brain is precisely that these AI systems are built to incorporate how we currently interpret things, to the best of our ability at the launch-point. Thus, generative text is an attempt at coding, say, my own stream of consciousness ranting and single data point extrapolation ad infinitum. (Though I don’t need prompting, just the occasional redirect and hard stop.) Computer vision just attempts to generalize and harmonize the relationship between approximate and precise viewing, using compute to approximate the intuitive process as closely as possible (as AI problems scale with compute, not efficiency). Even deep learning neural networks are based off of the early-brain development “sponge brain” — after all, you learned your first language from scratch by immersing in it, didn’t you? When we contextualize the process in something we can grasp, we can then better apply it to our own day-to-day to streamline stuff we really ought to not bother with. AI is the biggest opportunity to exponentiate your abilities: every time you get a paradigm shift, the meta warps, but the skill tree doesn’t reset. It’s not unbiased from the previous meta. The question becomes, do we want to use this to replace what we’re good at — finding nuance quickly through little data synthesis, making esoteric connections — with a pale imitation, or do we want to scale simple processes that are extremely time consuming:

Where the human starts to unravel is when they have to put a bunch of complex processes together. Chess is a lot more than tactics, which is why people who are good at online bullet who have never played OTB likely don’t understand the full game at all. Computing power allows for all these processes to be handled simultaneously

Thus, the theoretical thinker is rewarded: an AI coding assistant turbocharges the developer with enough of the base skills and the creativity to reframe their idea of the job from “writing code” to “designing the process with which I do it.” Beyond “prompt engineering” or “productivity hacks”, the core competency becomes “how do I think systemically and translate this into precise language such that a computer designed to interpret it does what I want it to?”

Frankly, that sounds like a ton of fun, and I can’t wait for more. The game hasn’t changed — it just got more fierce.

You could say your personality/disposition is your "meta prompting"? But then, this aspect of humans never decays over time and must be reprompted, unless you'd say mental illness is exactly this. I'm not enough of a prompt engineer to really compare, though...

On the topic of eyesight, have you seen EndMyopia?